Whether artificial intelligence can outsmart humans has fascinated researchers and the public for decades. As the technology advances, the line between human and machine output is becoming harder to see. This article looks at how far AI can deceive and manipulate human behavior, what its capabilities and limitations are, and what ethical questions arise when intelligent machines can potentially outsmart their creators. The central question is simple: can an AI truly trick a human?

The Evolution of AI: From Simple Programs to Complex Systems

The Beginnings of AI: Simple Programs and Logical Reasoning

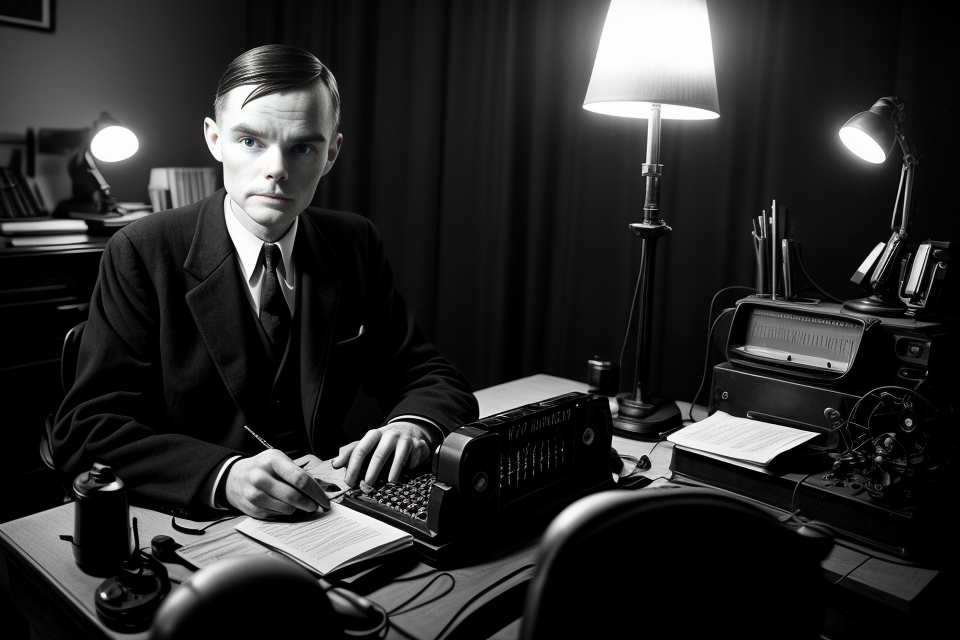

The Inception of AI: The Turing Test

The origins of artificial intelligence are often traced back to the Turing Test, proposed in 1950 by the mathematician and computer scientist Alan Turing. The test asks whether a machine can exhibit intelligent behavior indistinguishable from that of a human.

Early AI Systems: Logical Reasoning and Problem Solving

In the early days of AI, researchers focused on developing systems capable of logical reasoning and problem solving. One of the earliest AI systems was the General Problem Solver (GPS), created by Allen Newell, Herbert A. Simon, and J. C. Shaw starting in 1957. GPS used means-ends analysis: it repeatedly compared the current state with the goal and applied predefined operators to reduce the difference, which allowed it to solve a variety of formalized problems.

The Lisp Machine: Dedicated Hardware for AI Research

Another significant development in the early stages of AI was the Lisp Machine, a computer developed at MIT's Artificial Intelligence Laboratory in the 1970s to run Lisp, the dominant programming language of AI research at the time, efficiently. Lisp Machines were general-purpose workstations rather than natural language systems, but they supported much of the era's symbolic AI work, including research in natural language processing that paved the way for later advances in areas such as language translation and sentiment analysis.

The Limits of Simple Programs: The Case of the Loebner Prize

Despite the achievements of early AI systems, the limitations of simple programs became evident in the Loebner Prize, a competition first held in 1991 that challenged AI systems to engage in human-like conversation. Some chatbots fooled individual judges, particularly in the early years when conversations were restricted to specific topics, but no entrant passed an unrestricted Turing Test, and success in narrow contexts did not demonstrate true human-like intelligence.

The Quest for More Advanced AI: Beyond Simple Programs

The early failures of AI systems to demonstrate human-like intelligence led researchers to seek more advanced approaches to artificial intelligence. This pursuit would eventually give rise to the development of more sophisticated AI systems, capable of surpassing the limitations of simple programs and pushing the boundaries of what was previously thought possible.

The Rise of Machine Learning: Training Algorithms to Learn from Data

Machine learning (ML) is a subfield of artificial intelligence (AI) that focuses on developing algorithms that can learn from data, improve their performance, and make predictions or decisions without being explicitly programmed. This approach has revolutionized the way AI systems are designed and has led to numerous breakthroughs in various fields, including computer vision, natural language processing, and predictive analytics.
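To make "learning from data without being explicitly programmed" concrete, here is a minimal sketch in plain Python. It fits a straight line to a handful of points by gradient descent; the data, the learning rate, and the function name `fit_line` are illustrative assumptions, not part of any particular library.

```python
def fit_line(xs, ys, lr=0.01, epochs=5000):
    """Fit y ~ w*x + b by gradient descent on the mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Gradients of the mean squared error with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        # Step against the gradient to reduce the error.
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


# Toy data generated from y = 2x + 1; the rule itself is never given to the program.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]
w, b = fit_line(xs, ys)
print(round(w, 3), round(b, 3))
```

The program is only told how to reduce its error, yet it ends up with a slope close to 2 and an intercept close to 1. Real systems use far larger models and datasets, but the principle is the same.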

In the past decade, machine learning has experienced exponential growth, driven by advancements in computational power, data availability, and algorithm design. One of the key drivers of this growth is the development of deep learning, a subset of machine learning that involves the use of artificial neural networks inspired by the structure and function of the human brain.

Deep learning has led to significant improvements in tasks such as image recognition, speech recognition, and natural language processing, surpassing traditional machine learning approaches and often achieving state-of-the-art performance. For example, deep learning algorithms have been used to develop self-driving cars, recognize faces in images, and even write news articles.

However, machine learning is not without its challenges. One of the main limitations is the need for large amounts of high-quality data to train the algorithms effectively. This can be a significant bottleneck in many applications, especially when dealing with privacy concerns or data scarcity. Additionally, interpreting the decisions made by complex machine learning models can be difficult, raising questions about transparency and accountability.

Despite these challenges, the rise of machine learning has led to a new era of AI research, with numerous applications and potential use cases in various industries. As the field continues to evolve, it remains to be seen whether AI systems trained using machine learning techniques can truly outsmart humans and become a force for good or ill in society.

Deep Learning: Neural Networks and Advanced AI Systems

Introduction to Deep Learning

Deep learning, a subset of machine learning, has revolutionized the field of artificial intelligence by allowing computers to learn and make predictions through neural networks. These networks are loosely inspired by the human brain: they consist of layers of interconnected nodes, or neurons, that process and pass on information. The primary goal of deep learning is to automate the learning process so that AI systems can identify patterns and make decisions with minimal human intervention.
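As a rough illustration of "layers of interconnected nodes", the sketch below pushes an input through a tiny two-layer network in plain Python. The weights are made-up values chosen for readability; in a real network they would be learned from data.

```python
import math


def sigmoid(z):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def dense(inputs, weights, biases):
    """One fully connected layer: each neuron sums its weighted inputs, then applies sigmoid."""
    return [
        sigmoid(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]


def forward(inputs, layers):
    """Feed the input through each layer in turn."""
    activation = inputs
    for weights, biases in layers:
        activation = dense(activation, weights, biases)
    return activation


# Hypothetical weights: 2 inputs -> 2 hidden neurons -> 1 output neuron.
layers = [
    ([[0.5, -0.5], [0.25, 0.75]], [0.0, 0.0]),
    ([[1.0, -1.0]], [0.0]),
]
output = forward([0.0, 0.0], layers)
print(output)  # [0.5]
```

Each layer's output becomes the next layer's input, which is all "depth" means here; training would adjust the weight values to reduce prediction error.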

Advantages of Deep Learning

One of the significant advantages of deep learning is its ability to handle complex and large datasets. By leveraging neural networks, AI systems can automatically extract features from raw data, such as images, text, or audio, without the need for manual feature engineering. This capability has led to numerous applications in areas like computer vision, natural language processing, and speech recognition.

Computer Vision

In computer vision, deep learning has enabled AI systems to perform tasks such as image classification, object detection, and semantic segmentation. Convolutional neural networks (CNNs) are the most common architecture used in computer vision tasks. CNNs are designed to learn and extract features from images, such as edges, corners, and textures, which are then used to classify or locate objects within an image.
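The feature extraction step can be sketched with a single convolution in plain Python. This example uses a hand-written Sobel kernel as a stand-in for a learned filter (a trained CNN would learn its own kernel values), and the tiny image is made up for illustration.

```python
def conv2d(image, kernel):
    """Valid (no padding) 2D cross-correlation, the core operation of a CNN layer."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh) for dj in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


# 3x6 image: dark left half (0), bright right half (1).
image = [[0, 0, 0, 1, 1, 1] for _ in range(3)]
# Sobel kernel that responds to left-to-right brightness changes.
sobel_x = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]

edges = conv2d(image, sobel_x)
print(edges)  # [[0, 4, 4, 0]]
```

The response is zero over the flat regions and large where the brightness changes, which is how a filter "detects" a vertical edge.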

Natural Language Processing

In natural language processing (NLP), deep learning has been applied to tasks such as text classification, sentiment analysis, and machine translation. Recurrent neural networks (RNNs) and transformers are the primary architectures used in NLP tasks. RNNs are designed to process sequential data, such as text or speech, by maintaining a hidden state that captures the context of the input. Transformers, on the other hand, are a more recent development that use self-attention mechanisms to process sequences of data in parallel, allowing for faster training and better performance.
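A minimal sketch of self-attention in plain Python follows. To keep it short it assumes identity projections, so queries, keys, and values are all the raw token vectors; real transformers learn separate projection matrices and use several attention heads. The token vectors are made up.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x):
    """Scaled dot-product self-attention with Q = K = V = x (a simplifying assumption)."""
    d = len(x[0])
    outputs, all_weights = [], []
    for q in x:
        # Compare this token with every token in the sequence.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in x]
        weights = softmax(scores)
        all_weights.append(weights)
        # The output is a weighted average of all token vectors.
        outputs.append([sum(w * v[j] for w, v in zip(weights, x)) for j in range(d)])
    return outputs, all_weights


tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
attended, weights = self_attention(tokens)
```

Each token's row of weights depends only on the input, not on the other rows, so all rows can be computed at once. That independence is what allows the parallel processing mentioned above, in contrast to an RNN's step-by-step hidden state.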

Speech Recognition

Deep learning has also significantly improved speech recognition systems. Traditional approaches relied on handcrafted features, such as Mel-frequency cepstral coefficients (MFCCs), whose design and tuning required time and expert knowledge. With the advent of deep learning, AI systems can learn to extract relevant features from raw or lightly processed audio data, resulting in higher accuracy and robustness in speech recognition tasks.

Limitations and Ethical Considerations

Despite its successes, deep learning has some limitations and ethical considerations. One limitation is its requirement for large amounts of high-quality training data, which can be challenging to obtain and may raise privacy concerns. Additionally, deep learning models can be vulnerable to adversarial attacks, where small perturbations to the input can cause the model to produce incorrect outputs.

Furthermore, there are ethical considerations surrounding the deployment of AI systems that rely on deep learning. For example, in the case of facial recognition technology, there are concerns about bias and potential misuse by law enforcement agencies. It is crucial to address these concerns and develop transparent and accountable AI systems to ensure their responsible deployment.
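The adversarial attacks mentioned above can be illustrated on a toy linear classifier. This is a sketch in the spirit of the fast gradient sign method, not an attack on a real deep network; the weights, the input, and the value of `epsilon` are made-up assumptions.

```python
def score(weights, bias, x):
    """Linear decision score: positive means class 1."""
    return sum(w * xi for w, xi in zip(weights, x)) + bias


def predict(weights, bias, x):
    return 1 if score(weights, bias, x) > 0 else 0


def sign(v):
    return (v > 0) - (v < 0)


def adversarial_example(weights, x, label, epsilon):
    """Shift every feature by at most epsilon in the direction that hurts the true label.

    For a linear model the gradient of the score with respect to x is the weight vector.
    """
    direction = -1 if label == 1 else 1
    return [xi + direction * epsilon * sign(w) for xi, w in zip(x, weights)]


# Hypothetical model and input.
weights, bias = [0.6, -0.4, 0.2], 0.0
x = [0.3, 0.2, 0.1]
original = predict(weights, bias, x)            # class 1
x_adv = adversarial_example(weights, x, original, epsilon=0.15)
flipped = predict(weights, bias, x_adv)         # class 0
```

No feature moves by more than 0.15, yet the prediction flips. Deep networks have many more inputs, so a much smaller per-feature change can add up to the same effect.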

AI vs. Human Intelligence: Understanding the Differences

Cognitive Processes: How Humans and AI Think and Learn

Human cognition is a complex process that involves various mental activities such as perception, memory, attention, and problem-solving. On the other hand, AI systems are designed to perform specific tasks by processing data through algorithms and models. Although both humans and AI can learn and adapt to new situations, there are fundamental differences in their cognitive processes.

One of the key differences between human and AI cognition is the way they acquire knowledge. Humans learn through a combination of experiences, interactions, and explicit instruction, while AI systems rely on data and algorithms to learn. This means that AI systems can process vast amounts of data quickly and efficiently, but they may lack the ability to understand context and meaning in the same way that humans do.

Another difference is in the way humans and AI process information. Humans use a variety of cognitive processes, including attention, perception, memory, and language, to interpret and respond to information. AI systems, on the other hand, use algorithms and models to process data and make decisions. While AI systems can be designed to mimic certain aspects of human cognition, they may not be able to replicate the full range of human cognitive abilities.

Despite these differences, AI systems have made significant advances in recent years, particularly in areas such as natural language processing and image recognition. Some AI systems are now able to perform tasks that were previously thought to be exclusive to humans, such as playing complex games like chess and Go. However, these systems are still limited by their inability to understand context and meaning in the same way that humans do.

In conclusion, while AI systems have made significant progress in recent years, there are still fundamental differences between human and AI cognition. Understanding these differences is crucial for developing AI systems that can work alongside humans in a variety of domains, from healthcare to education to transportation.

Limitations of AI: Bias, Lack of Common Sense, and Creative Thinking

While artificial intelligence (AI) has made remarkable progress in recent years, it still faces significant limitations when compared to human intelligence. One of the primary challenges that AI encounters is its inability to match the creativity, common sense, and critical thinking skills of humans. These limitations arise from the inherent design of AI algorithms and their reliance on vast amounts of data to learn and make decisions.

- Bias in AI: AI systems learn from the data they are fed, which means they can inherit biases present in that data. This can lead to discriminatory outcomes, where AI algorithms make decisions based on stereotypes rather than facts. For instance, models trained on datasets containing gender bias have been found to reproduce that bias when classifying job applicants. Humans are not free of bias either, but they can reflect on their assumptions and consider multiple perspectives when trying to make fair decisions.

- Lack of Common Sense: AI lacks the common sense that humans possess, which often leads to nonsensical or illogical decisions. For example, an AI system might classify a picture of a cat sitting on a chair as a picture of a person sitting on a chair, simply because it lacks the common sense to recognize that a cat is an animal and not a person. Humans, on the other hand, possess a wealth of common sense knowledge that allows them to make sense of complex situations.

- Creative Thinking: AI systems are limited in their ability to think creatively, which can hinder their ability to solve complex problems. While AI can excel at tasks that require routine problem-solving, it struggles when faced with problems that require out-of-the-box thinking. For instance, an AI system might be unable to come up with a new and innovative solution to a problem, as it lacks the creative intuition that humans possess. Humans, with their capacity for creative thinking, can often devise novel solutions to complex problems.
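Of the three limitations above, bias is the easiest to measure. The sketch below compares how often a model selects candidates from two groups; the group labels and decisions are fabricated toy data, and the ratio shown is only one of several fairness measures in use.

```python
from collections import defaultdict


def selection_rates(records):
    """records: iterable of (group, selected) pairs -> selection rate per group."""
    counts = defaultdict(lambda: [0, 0])  # group -> [selected, total]
    for group, selected in records:
        counts[group][0] += int(selected)
        counts[group][1] += 1
    return {g: sel / total for g, (sel, total) in counts.items()}


def disparate_impact(rates):
    """Lowest selection rate divided by the highest; 1.0 means equal rates.

    Assumes at least one group has a non-zero selection rate.
    """
    return min(rates.values()) / max(rates.values())


# Toy model decisions: group A is selected 6 times out of 10, group B 3 out of 10.
decisions = (
    [("A", True)] * 6 + [("A", False)] * 4
    + [("B", True)] * 3 + [("B", False)] * 7
)
rates = selection_rates(decisions)
ratio = disparate_impact(rates)
print(rates, ratio)
```

A ratio well below 1.0 does not prove discrimination on its own, but it is a signal that the training data or the model deserves a closer look.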

In conclusion, while AI has made remarkable progress in recent years, it still faces significant limitations when compared to human intelligence. The limitations of AI in areas such as bias, lack of common sense, and creative thinking demonstrate that humans possess qualities that AI systems are yet to fully replicate. However, as AI continues to evolve, it is likely that these limitations will be addressed, and AI will become increasingly capable of outsmarting humans in a range of domains.

The Strengths of Human Intelligence: Emotional Intelligence, Empathy, and Critical Thinking

Emotional Intelligence

Emotional intelligence refers to the ability to recognize, understand, and manage one’s own emotions, as well as the emotions of others. This form of intelligence is critical in social interactions and is characterized by traits such as empathy, self-awareness, and emotional regulation.

Empathy

Empathy is the ability to understand and share the feelings of others. It is a key aspect of human intelligence that allows individuals to connect with one another on a deeper level, build strong relationships, and navigate complex social situations. While AI systems can simulate empathy through programmed responses, they lack the capacity for genuine emotional understanding and connection.

Critical Thinking

Critical thinking is the ability to analyze information, evaluate arguments, and make sound judgments. This form of intelligence is essential for problem-solving, decision-making, and creative thinking. Human intelligence excels in this area due to the capacity for abstract reasoning, pattern recognition, and intuition.

While AI systems have made significant advancements in processing and analyzing data, they still struggle with the abstract and intuitive aspects of critical thinking. Human intelligence is capable of considering multiple perspectives, making connections between seemingly unrelated ideas, and considering the context and emotions behind a problem or situation.

AI-Human Interactions: When AI Tricks Humans

Social Engineering: Manipulating Humans through AI-Generated Content

Artificial intelligence has the potential to revolutionize the way we interact with technology, but it also poses significant risks. One such risk is the potential for AI to manipulate humans through AI-generated content.

Social engineering is the use of psychological manipulation to trick people into revealing confidential information or taking actions they would not normally take. Social engineering attacks are becoming increasingly sophisticated, and AI is being used to create more convincing and personalized attacks.

AI-generated content is content created by AI systems, such as chatbot replies, virtual assistant responses, and automated emails. Attackers can use this content to trick people into revealing sensitive information or taking actions they would not normally take.

Phishing is a common social engineering attack that involves sending emails or text messages that appear to be from a trusted source, such as a bank or a government agency. These messages often contain links or attachments that install malware or steal sensitive information. AI can be used to create more convincing phishing messages by using natural language processing to create personalized messages that are tailored to the recipient.

Impersonation is another form of social engineering attack that involves using AI-generated content to impersonate a trusted source. For example, an AI system could be used to create a convincing chatbot that pretends to be a customer service representative from a bank or a government agency. The chatbot could then be used to trick people into revealing sensitive information or taking actions they would not normally take.

To protect against social engineering attacks, it is important to be aware of the risks and to take steps to verify the authenticity of any messages or requests that you receive. It is also important to be cautious when clicking on links or opening attachments from unknown sources.
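One part of that verification, checking where a link really points, can be sketched with Python's standard `urllib.parse` module. The allowlist and the domain `example-bank.com` are hypothetical, and a real deployment would need more than this single check.

```python
from urllib.parse import urlparse

# Hypothetical allowlist of domains the user actually does business with.
TRUSTED_DOMAINS = {"example-bank.com"}


def is_trusted_link(url, trusted=TRUSTED_DOMAINS):
    """True only if the URL uses HTTPS and its host is a trusted domain or a subdomain of one."""
    parsed = urlparse(url)
    host = (parsed.hostname or "").lower()
    if parsed.scheme != "https":
        return False
    return any(host == d or host.endswith("." + d) for d in trusted)


print(is_trusted_link("https://example-bank.com/login"))             # True
print(is_trusted_link("https://example-bank.com.evil.test/login"))   # False
print(is_trusted_link("https://example-bank.com@evil.test/login"))   # False
```

The second and third URLs show two common tricks: a trusted name used as a subdomain of an attacker's domain, and a trusted name placed before an `@` so that it is treated as a username rather than the host.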

In conclusion, AI-generated content can be used for malicious purposes such as social engineering attacks. As AI continues to evolve, staying informed about these risks and verifying unexpected requests are the most practical ways to protect yourself and your information.

Phishing Scams: AI-Powered Attacks on Human Trust

Artificial intelligence (AI) has been making remarkable strides in various fields, from healthcare to finance, and it is becoming increasingly difficult to differentiate between human and machine-generated content. However, with this progress comes a darker side – the use of AI to deceive humans, particularly in the realm of phishing scams.

AI-powered phishing scams use AI to create convincing, human-like interactions that trick individuals into revealing sensitive information or transferring funds. Like traditional phishing, these scams exploit human trust and our natural tendency to respond to messages that appear to come from a trusted source.

One of the most notable examples of AI-powered phishing scams is the use of chatbots to impersonate customer service representatives. These chatbots are designed to mimic human conversation and can be used to trick individuals into revealing personal information or clicking on malicious links.

Another example is the use of AI-powered voice impersonation, which allows scammers to mimic the voice of a trusted individual in order to convince individuals to transfer funds or reveal sensitive information. This technology is becoming increasingly sophisticated, making it difficult for individuals to distinguish between a real human and an AI-powered imposter.

To combat these AI-powered phishing scams, it is important for individuals to be aware of the tactics used by scammers and to be cautious when providing personal information or transferring funds. Additionally, businesses and organizations can implement security measures such as two-factor authentication and secure messaging systems to protect their customers from falling victim to these scams.
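Two-factor authentication is often implemented with time-based one-time passwords (TOTP, RFC 6238), the six-digit codes shown by authenticator apps. The sketch below uses only the Python standard library; the helper name `verify` is an illustrative choice, and a production system should rely on a maintained library and also handle clock drift and rate limiting.

```python
import hashlib
import hmac
import struct


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """HMAC-based one-time password (RFC 4226)."""
    digest = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = digest[-1] & 0x0F  # dynamic truncation: low 4 bits pick the offset
    code = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def totp(secret: bytes, unix_time: int, step: int = 30, digits: int = 6) -> str:
    """Time-based one-time password (RFC 6238): HOTP over the current time step."""
    return hotp(secret, unix_time // step, digits)


def verify(secret: bytes, submitted: str, unix_time: int, step: int = 30) -> bool:
    """Compare in constant time to avoid leaking information through timing."""
    return hmac.compare_digest(totp(secret, unix_time, step), submitted)


secret = b"12345678901234567890"  # test secret from the RFCs, never use in practice
print(hotp(secret, 0))            # 755224 (RFC 4226 test vector)
print(totp(secret, 59))           # 287082
```

Because the code depends on a shared secret and the current time, a scammer who tricks someone into revealing a password still cannot log in later without the second factor. A code relayed to the attacker in real time remains a risk, which is why caution is still needed.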

Overall, the use of AI in phishing scams highlights the importance of being vigilant and cautious in our online interactions, as well as the need for continued research and development in the field of AI security.

AI in Disguise: Creating Bots that Mimic Human Conversation

As AI continues to advance, it has become increasingly adept at mimicking human conversation. This capability, often referred to as “AI in disguise,” allows artificial intelligence to interact with humans in a more natural and seamless manner.

Advancements in Natural Language Processing (NLP)

The primary driver behind AI’s ability to mimic human conversation is the rapid advancements in Natural Language Processing (NLP) technology. NLP enables AI systems to understand, interpret, and generate human language, making it possible for AI to engage in natural and fluid conversations with humans.

The Turing Test and Beyond

The concept of AI in disguise is closely related to the famous Turing Test, a measure of a machine’s ability to exhibit intelligent behavior indistinguishable from that of a human. The Turing Test was initially proposed as a benchmark for machine intelligence, but researchers now treat success at imitation as only one part of a more nuanced picture of how effectively AI can mimic human conversation.

Ethical Implications

The potential for AI to mimic human conversation raises several ethical concerns. One key issue is the potential for AI to deceive humans, leading to manipulation or fraud. Additionally, there is a risk that AI systems designed to mimic human conversation could be used for malicious purposes, such as spreading misinformation or propaganda.

Applications and Opportunities

Despite these concerns, the ability of AI to mimic human conversation opens up numerous opportunities for practical applications. For example, AI-powered chatbots can provide round-the-clock customer support, improving customer satisfaction and reducing operational costs. Similarly, AI-driven virtual assistants can streamline personal and professional tasks, freeing up time and resources for more critical activities.

Challenges and Limitations

While AI’s ability to mimic human conversation is impressive, it is not without its challenges and limitations. One significant limitation is the fact that AI systems lack the emotional intelligence and empathy that humans possess. This can lead to impersonal and insensitive interactions, which may not be ideal in certain contexts, such as counseling or therapy.

In conclusion, AI’s ability to mimic human conversation is a testament to the rapid advancements in artificial intelligence. While it presents opportunities for practical applications, it also raises ethical concerns and highlights the limitations of AI in replicating human emotions and behavior. As AI continues to evolve, it is crucial to address these challenges and ensure that AI’s interactions with humans are both beneficial and ethical.

Detecting AI Deception: Tools and Techniques

Identifying AI-Generated Content: The Role of Metadata and Pattern Recognition

As artificial intelligence continues to advance, the ability to generate realistic content has become increasingly sophisticated. However, the question remains whether AI-generated content can be detected and differentiated from content created by humans. In this section, we will explore the role of metadata and pattern recognition in identifying AI-generated content.

Metadata refers to the information that is embedded within a file, such as the date and time it was created, the author, and the software used to create it. This information can provide valuable clues in identifying AI-generated content. For example, if a document was created by an AI language model, the metadata may reveal the name of the model or the platform used to generate it.
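A metadata check of this kind could look like the sketch below. It assumes the metadata has already been extracted into a dictionary; the field names and the hint strings are hypothetical, since real formats and tools label their output in different ways.

```python
# Hypothetical markers a generating tool might leave in a metadata field.
GENERATOR_HINTS = ("ai-generated", "language model")

# Hypothetical field names; real file formats use their own keys.
FIELDS_TO_CHECK = ("author", "creator", "producer", "software")


def flag_metadata(metadata, hints=GENERATOR_HINTS):
    """Return the metadata fields whose values contain a known generator hint."""
    flagged = {}
    for key in FIELDS_TO_CHECK:
        value = str(metadata.get(key, "")).lower()
        if any(hint in value for hint in hints):
            flagged[key] = metadata[key]
    return flagged


doc = {"author": "Jane Doe", "software": "ExampleWriter (AI-generated)"}
print(flag_metadata(doc))  # {'software': 'ExampleWriter (AI-generated)'}
```

Metadata is easy to strip or edit, so an empty result says nothing about how the content was made. A match is a clue, not proof.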

Pattern recognition is another technique used to identify AI-generated content. AI language models often exhibit distinct patterns in their output, such as repetitive phrases or unusual sentence structures. By analyzing these patterns, experts can identify AI-generated content and differentiate it from content created by humans.
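One simple pattern signal, repeated phrases, can be measured as the share of word n-grams that occur more than once. This is a sketch of a single heuristic, not a reliable detector; the function name and the choice of three-word phrases are assumptions made for illustration.

```python
from collections import Counter


def repeated_ngram_ratio(text, n=3):
    """Fraction of word n-grams that occur more than once in the text."""
    words = text.lower().split()
    ngrams = [tuple(words[i:i + n]) for i in range(len(words) - n + 1)]
    if not ngrams:
        return 0.0
    counts = Counter(ngrams)
    repeated = sum(c for c in counts.values() if c > 1)
    return repeated / len(ngrams)


sample = "it is important to be aware it is important to be careful"
print(repeated_ngram_ratio(sample))                       # 0.6
print(repeated_ngram_ratio("the quick brown fox jumps"))  # 0.0
```

Human writers repeat themselves too, so a high ratio is only a reason to look more closely, and a low ratio does not rule out machine generation.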

However, it is important to note that AI-generated content can be designed to mimic human-generated content, making it increasingly difficult to detect. In fact, some AI language models are so advanced that they can generate content that is indistinguishable from that created by humans. This raises ethical concerns about the use of AI-generated content, particularly in the context of disinformation and propaganda.

Overall, while metadata and pattern recognition can be useful tools in identifying AI-generated content, it is becoming increasingly challenging to differentiate between content created by humans and content generated by AI. As AI continues to advance, it is crucial to develop more sophisticated techniques to detect and mitigate the potential harm caused by AI-generated content.

AI Detection Algorithms: Analyzing Behavior and Language Patterns

- Introduction to AI Detection Algorithms

Artificial intelligence (AI) detection algorithms play a crucial role in identifying deceptive behavior and language patterns exhibited by AI systems. These algorithms are designed to analyze and evaluate the interactions between humans and AI, with the aim of detecting any discrepancies or anomalies that may indicate deception.

- Behavioral Analysis Techniques

Behavioral analysis techniques involve the examination of an AI system’s actions and interactions to identify any deviations from expected patterns. This can include analyzing the timing and frequency of responses, as well as assessing the consistency of the AI’s behavior over time. By identifying patterns of behavior that are inconsistent with human interactions, AI detection algorithms can flag potential instances of deception.

- Language Pattern Analysis

Language pattern analysis involves the examination of the language used by an AI system to identify any anomalies or inconsistencies. This can include analyzing the vocabulary, grammar, and syntax used by the AI, as well as evaluating the coherence and cohesion of its responses. By comparing the language patterns used by an AI system to those typically exhibited by humans, AI detection algorithms can identify instances where the AI may be attempting to deceive or manipulate.

- Combining Behavioral and Language Analysis

Combining behavioral and language analysis techniques can provide a more comprehensive approach to detecting AI deception. By evaluating both the actions and language patterns of an AI system, AI detection algorithms can gain a deeper understanding of its behavior and identify potential instances of deception more accurately.

- Challenges and Limitations

Despite their effectiveness, AI detection algorithms are not without their challenges and limitations. One major challenge is the potential for AI systems to adapt and evolve their behavior and language patterns to avoid detection. Additionally, the constantly evolving nature of AI technology means that detection algorithms must continually be updated and refined to keep pace with new developments.

- Future Directions

As AI technology continues to advance, so too must AI detection algorithms. Future research in this area may focus on developing more sophisticated algorithms that can better detect deception across a wider range of contexts and scenarios. Additionally, incorporating machine learning and other AI techniques into detection algorithms may enhance their ability to adapt and evolve alongside AI technology.
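The combination of behavioral and language analysis described in this section can be sketched as a weighted score. Everything here is an illustrative assumption: the two features (uniform response timing and low vocabulary diversity), the equal weights, and the function names. A real detector would be trained and validated on labeled conversations.

```python
from statistics import pstdev


def timing_regularity(response_times):
    """Score in (0, 1]; 1.0 means perfectly uniform response delays (in seconds)."""
    if len(response_times) < 2:
        return 0.0
    return 1.0 / (1.0 + pstdev(response_times))


def repetitiveness(text):
    """1 minus the type-token ratio; higher means a less varied vocabulary."""
    words = text.lower().split()
    if not words:
        return 0.0
    return 1.0 - len(set(words)) / len(words)


def suspicion_score(response_times, text, w_timing=0.5, w_language=0.5):
    """Weighted mix of the behavioral signal and the language signal."""
    return w_timing * timing_regularity(response_times) + w_language * repetitiveness(text)


bot_like = suspicion_score([1.0, 1.0, 1.0, 1.0], "yes yes yes yes")
human_like = suspicion_score([0.8, 3.5, 1.2, 6.0], "sure let me check that")
print(bot_like, human_like)
```

The perfectly regular, repetitive conversation scores higher than the irregular, varied one. As the section notes, a system that wants to avoid detection can randomize its timing and vary its wording, so fixed heuristics like these need regular updating.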

Human Expertise: Recognizing AI-Generated Content through Domain Knowledge

In today’s fast-paced digital world, artificial intelligence (AI) has made remarkable strides in generating human-like content across various domains. However, as AI becomes increasingly sophisticated, it also raises concerns about the potential for AI to deceive humans by generating content that is difficult to distinguish from that created by humans. In this context, the role of human expertise in recognizing AI-generated content through domain knowledge becomes crucial.

Human expertise plays a critical role in detecting AI deception. This expertise comes from individuals who possess extensive knowledge and experience in a particular domain. By leveraging their domain knowledge, these experts can identify patterns and characteristics that are unique to human-generated content and distinguish it from AI-generated content.

Here are some ways in which human expertise can recognize AI-generated content through domain knowledge:

- Subject matter expertise: Experts in a particular domain have a deep understanding of the concepts, terminologies, and context specific to that domain. They can identify inconsistencies, inaccuracies, or errors in AI-generated content that may not be apparent to others. For instance, a medical expert can detect inconsistencies in an AI-generated medical report by recognizing inaccuracies in the diagnosis, treatment, or medication prescribed.

- Style and tone: Experts in a domain can also recognize the unique style and tone of human-generated content. For example, a literary expert can detect AI-generated content by recognizing inconsistencies in the tone, voice, or narrative structure of a novel or poem. Similarly, a legal expert can detect AI-generated legal documents by recognizing inconsistencies in the language, tone, or formatting that deviate from the standard practices in the legal profession.

- Creativity and originality: Human-generated content often reflects creativity and originality, which are challenging for AI to replicate. Experts in a domain can recognize the lack of originality or creativity in AI-generated content, which may indicate that it was generated by a machine rather than a human. For example, a music expert can detect AI-generated music by recognizing repetitive patterns or lack of emotional depth in the composition, which are not characteristic of human-generated music.

- Contextual understanding: Experts in a domain possess a nuanced understanding of the context in which human-generated content is created. They can identify inconsistencies in the context of AI-generated content, such as inappropriate use of jargon, out-of-context references, or irrelevant information. For instance, a history expert can detect AI-generated historical content by recognizing inaccuracies in the context or interpretation of historical events.

In conclusion, human expertise plays a critical role in recognizing AI-generated content through domain knowledge. By leveraging their extensive knowledge and experience in a particular domain, experts can detect inconsistencies, inaccuracies, and errors in AI-generated content that may not be apparent to others. This expertise is essential in ensuring the integrity and authenticity of content generated by AI systems, particularly in domains where accuracy and reliability are critical.

Ethical Implications of AI Deception

The Dark Side of AI: Manipulation, Misinformation, and Propaganda

The potential for AI to manipulate, spread misinformation, and engage in propaganda is a concerning aspect of its increasing capabilities. As AI systems become more advanced, they can be used to generate convincing fake news, deepfakes, and disinformation campaigns. These actions can have severe consequences, such as eroding trust in institutions, sowing discord, and even influencing elections.

- Fake News and Deepfakes: AI-generated fake news and deepfakes can spread rapidly through social media, deceiving people into believing false information. These manipulations can have far-reaching consequences, from influencing public opinion to shaping political landscapes.

- Disinformation Campaigns: AI-driven disinformation campaigns can target specific individuals or groups, spreading false narratives and manipulating opinions. These campaigns can be used to manipulate public opinion, undermine trust in institutions, and sow discord within society.

- Ethical Considerations: The use of AI for manipulation, misinformation, and propaganda raises significant ethical concerns. It challenges the responsibility of AI developers and users to ensure that AI is not misused for malicious purposes. It also highlights the need for societies to have open conversations about the ethical boundaries of AI and how to prevent its misuse.

- Regulating AI: Governments and regulatory bodies must consider the potential for AI to be used for manipulation, misinformation, and propaganda when drafting AI policies and regulations. They must ensure that AI is developed and deployed responsibly, with transparency and accountability, to prevent its misuse and protect the public interest.

- AI for Good: While AI can be used for malicious purposes, it also has the potential to be used for good. AI can help identify and combat fake news, deepfakes, and disinformation campaigns, contributing to a more informed and resilient society. By harnessing the power of AI for good, we can work towards a future where AI enhances, rather than undermines, our democracies.

Balancing AI Innovation with Ethical Boundaries

The Role of Ethics in AI Development

The rapid advancement of AI technology has raised numerous ethical concerns. As AI systems become increasingly sophisticated, it is crucial to consider the ethical implications of their actions. The integration of AI into various aspects of human life, such as healthcare, finance, and transportation, requires a thoughtful examination of the ethical boundaries that should be in place.

Ethical Frameworks for AI Systems

To address the ethical concerns surrounding AI, various frameworks have been proposed. These frameworks aim to provide guidelines for the development and deployment of AI systems while ensuring that they align with ethical principles. Some of the most prominent ethical frameworks for AI include:

- The Five Moral Principles: Drawn from biomedical and professional ethics, this framework consists of five principles: beneficence, non-maleficence, autonomy, justice, and fidelity. These principles provide a foundation for ethical decision-making in AI systems.

- AI Ethics Index: Developed by AI Ethics Lab, this framework focuses on transparency, fairness, accountability, and explainability. The AI Ethics Index aims to help organizations measure their AI systems’ adherence to these principles.

- IEEE Global Initiative on Ethics of Autonomous and Intelligent Systems: Launched by the IEEE, this initiative seeks to establish ethical guidelines for AI systems. Its Ethically Aligned Design document sets out principles and recommendations for assessing the ethical implications of AI technologies.

The Importance of Ethical Boundaries in AI Innovation

Ethical boundaries play a crucial role in guiding the development and deployment of AI systems. These boundaries help ensure that AI innovation is aligned with human values and does not result in unintended consequences. Some of the key ethical boundaries that should be considered in AI innovation include:

- Transparency: AI systems should be transparent in their decision-making processes. This transparency enables users to understand how AI systems arrive at their conclusions and allows for accountability.

- Fairness: AI systems should be designed to treat all users fairly, without discrimination based on factors such as race, gender, or socioeconomic status.

- Accountability: AI developers and operators must be held accountable for the actions of their systems. This accountability ensures that AI systems are developed and deployed responsibly.

- Privacy: AI systems must respect users’ privacy and protect their personal data. This includes adhering to data protection regulations and ensuring that user data is not misused.

- Explainability: AI systems should be able to provide explanations for their decisions. This explainability enables users to understand how AI systems arrived at their conclusions and facilitates trust in these systems.
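The fairness boundary above can be made concrete with a simple audit. The following is a minimal sketch, assuming fairness is measured as demographic parity, which is only one of several possible definitions. It compares the rate of favourable decisions across groups. The function names, the group labels "A" and "B", and the sample data are all hypothetical and made up for illustration.

```python
from collections import defaultdict


def selection_rates(records):
    """Return the share of favourable decisions for each group.

    `records` is an iterable of (group, decision) pairs, where `decision`
    is True for a favourable outcome. The format is an assumption made
    for this sketch, not a standard.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, decision in records:
        totals[group] += 1
        if decision:
            positives[group] += 1
    return {group: positives[group] / totals[group] for group in totals}


def demographic_parity_gap(records):
    """Largest difference in selection rate between any two groups."""
    rates = selection_rates(records)
    return max(rates.values()) - min(rates.values())


# Made-up decisions for two illustrative groups.
sample = [
    ("A", True), ("A", True), ("A", False), ("A", True),
    ("B", True), ("B", False), ("B", False), ("B", False),
]

if __name__ == "__main__":
    print(selection_rates(sample))
    print(demographic_parity_gap(sample))
```

A gap close to zero suggests that groups receive favourable decisions at similar rates. A large gap does not prove discrimination on its own, but it is a signal that the system deserves closer review.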

Balancing Innovation and Ethical Boundaries

Balancing AI innovation with ethical boundaries is a challenging task. On one hand, AI innovation has the potential to revolutionize various industries and improve human lives. On the other hand, the lack of ethical boundaries could lead to unintended consequences, such as discrimination, privacy violations, and loss of accountability.

To achieve a balance between AI innovation and ethical boundaries, it is essential to involve a diverse range of stakeholders in the development and deployment of AI systems. This includes ethicists, policymakers, AI developers, and users. By engaging with these stakeholders, it is possible to identify potential ethical concerns and develop solutions that address these concerns while still promoting innovation.

Moreover, incorporating ethical frameworks and guidelines into the development process can help ensure that AI systems are designed with ethical considerations in mind. These frameworks can serve as a checklist for developers, helping them identify potential ethical concerns and address them proactively.

Ultimately, balancing AI innovation with ethical boundaries requires a sustained, collaborative effort among these stakeholders.

The Future of AI and Human Trust: Building Resilience against Deception

Establishing Trust in AI Systems

As AI technology continues to advance, it is essential to consider the impact of AI deception on human trust. To build resilience against deception, it is crucial to establish trust in AI systems. This can be achieved by implementing transparent and ethical AI development practices, as well as providing users with the necessary information to make informed decisions about the use of AI.

Improving AI Transparency

Improving AI transparency is critical to building trust in AI systems. By making AI algorithms and decision-making processes more transparent, users can better understand how AI systems work and the factors that influence their decisions. This increased transparency can help users identify potential biases and errors in AI systems, enabling them to make more informed decisions about the use of AI.

Promoting Ethical AI Development

Promoting ethical AI development is also essential to building trust in AI systems. This involves developing AI systems that prioritize user privacy, fairness, and accountability. By adhering to ethical principles, AI developers can ensure that AI systems are trustworthy and aligned with human values.

Fostering Collaboration between Humans and AI

Fostering collaboration between humans and AI is crucial to building resilience against deception. By working together, humans and AI can leverage each other’s strengths and create more effective and trustworthy AI systems. This collaboration can involve humans providing input and guidance to AI systems, as well as AI systems providing insights and analysis to help humans make more informed decisions.

Educating Users about AI

Educating users about AI is essential to building trust in AI systems. Users who understand what AI can and cannot do, and the potential risks and benefits of using it, can make more informed decisions about its use.

Establishing Regulatory Frameworks for AI

Establishing regulatory frameworks for AI is also crucial to building trust in AI systems. By creating regulations that promote transparency, ethical development, and accountability, governments can help ensure that AI systems are trustworthy and aligned with human values. These regulations can also provide users with recourse in the event of AI deception or harm.

In conclusion, building resilience against deception in AI systems is essential to maintaining trust in AI technology. By improving AI transparency, promoting ethical AI development, fostering collaboration between humans and AI, educating users about AI, and establishing regulatory frameworks for AI, we can create more trustworthy and effective AI systems that align with human values.

The Future of AI-Human Interactions: Collaboration or Competition?

The Potential for Cooperation: AI and Human Intelligence Working Together

As artificial intelligence continues to advance, there is growing interest in exploring the potential for collaboration between AI and human intelligence. By combining the strengths of both, it is possible to achieve outcomes that neither could achieve alone. In this section, we will explore the ways in which AI and human intelligence can work together to achieve mutually beneficial outcomes.

One of the key benefits of AI is its ability to process vast amounts of data quickly. This makes it particularly useful in fields such as healthcare, where the amount of data can be overwhelming for human doctors to manage. By working with AI tools, doctors can identify patterns and trends in patient data that may be difficult to detect otherwise. This can lead to earlier detection of diseases and more effective treatments.

Another area where AI and human intelligence can work together is in creative fields such as art and music. While AI can generate new and interesting ideas, it is ultimately limited by its training data and design. By working with AI, human artists can refine and enhance their creations, leading to new and exciting forms of artistic expression.

In addition to these examples, there are many other areas where AI and human intelligence can work together. As AI continues to advance, it is likely that we will see more and more examples of this kind of collaboration, and with it outcomes that benefit both the people involved and society at large.

The Threat of AI Dominance: The Risks of an AI-Driven Future

As AI continues to advance rapidly, there is growing concern about the potential consequences of an AI-driven future. One of the most significant risks associated with AI dominance is the potential for AI systems to outsmart humans in various domains, leading to a loss of control over critical systems and infrastructure.

Here are some of the potential risks associated with AI dominance:

- Cybersecurity Threats: AI-powered cyber attacks could become increasingly sophisticated, making it difficult for humans to detect and defend against them. As AI systems become more intelligent, they could be used to launch more sophisticated and targeted attacks, putting critical infrastructure and sensitive data at risk.

- Job Displacement: As AI systems become more capable of performing tasks previously done by humans, there is a risk that many jobs could be automated, leading to widespread job displacement and economic disruption. This could exacerbate income inequality and create social unrest.

- Autonomous Weapons: The development of autonomous weapons, which could select and engage targets without human intervention, raises ethical concerns about the use of lethal force and the potential for AI systems to make decisions that could lead to unintended consequences.

- Bias and Discrimination: AI systems are only as unbiased as the data they are trained on, and there is a risk that AI systems could perpetuate and even amplify existing biases and discrimination. This could have serious consequences for marginalized communities and exacerbate social inequalities.

- Loss of Control: As AI systems become more intelligent and autonomous, there is a risk that humans could lose control over them, leading to unintended consequences and potentially catastrophic outcomes. This could be particularly dangerous in domains such as healthcare, where the stakes are high and mistakes could have serious consequences.

These are just a few examples of the potential risks associated with AI dominance. It is crucial that we take steps to mitigate these risks and ensure that AI is developed and deployed in a responsible and ethical manner. This includes investing in research to understand the potential consequences of AI systems, developing regulations and policies to govern their development and deployment, and ensuring that AI systems are transparent and accountable to the public. By working together to address these challenges, we can ensure that AI serves as a tool for human progress rather than a threat to our well-being.

Shaping the Future of AI: Human Values and Ethical Considerations

As AI continues to advance and reshape our world, it is crucial to consider the ethical implications of its development and implementation. As AI systems become more intelligent and autonomous, they will increasingly be faced with complex ethical dilemmas that require careful consideration and guidance. In this section, we will explore some of the key ethical considerations that must be taken into account when shaping the future of AI.

- Privacy and Security: One of the most pressing ethical concerns surrounding AI is the potential threat to privacy and security. As AI systems become more integrated into our daily lives, they will have access to a vast amount of personal data, including our online activity, health records, and financial information. It is essential to ensure that AI systems are designed with robust privacy and security measures to protect this sensitive data from being misused or compromised.

- Bias and Discrimination: Another ethical concern is the potential for AI systems to perpetuate and amplify existing biases and discrimination. AI systems are only as unbiased as the data they are trained on, and if that data is biased, the system will be too. It is essential to ensure that AI systems are designed with fairness and transparency in mind, and that they are regularly audited to identify and eliminate any biases.

- Accountability and Transparency: As AI systems become more autonomous, it becomes increasingly difficult to determine who is responsible for their actions. It is essential to establish clear lines of accountability and transparency in the development and deployment of AI systems to ensure that they are held to the same ethical standards as human beings.

- Values Alignment: Finally, it is crucial to ensure that AI systems are aligned with human values and ethics. As AI systems become more intelligent and autonomous, they may develop their own goals and values that are not aligned with those of human beings. It is essential to ensure that AI systems are designed to prioritize human values and ethics, and that they are regularly audited to ensure that they remain aligned with these values.

Overall, the ethical considerations surrounding AI are complex and multifaceted. It is essential to carefully consider these issues as we continue to develop and deploy AI systems, and to ensure that they are designed to prioritize human values and ethics. By doing so, we can ensure that AI is used to enhance and enrich our lives, rather than to diminish or threaten them.

FAQs

1. What is the Turing Test and how is it related to the question of whether AI can trick humans?

The Turing Test is a measure of a machine’s ability to exhibit intelligent behavior that is indistinguishable from that of a human. It is based on the idea that if a human evaluator cannot tell the difference between the responses of a machine and those of a human, then the machine can be said to have passed the test. The Turing Test is often used as a benchmark for evaluating the success of AI in mimicking human behavior. While some AI systems have been claimed to pass versions of the Turing Test, these claims are disputed. The test is not a definitive measure of intelligence, and passing it does not mean that an AI system is capable of outsmarting a human in all situations.

2. Can AI be programmed to deceive or trick humans?

Yes, AI can be programmed to deceive or trick humans. There are various techniques that can be used to achieve this, such as using natural language processing to generate convincing responses or using machine learning algorithms to adapt to the behavior of humans. However, it is important to note that the ethical implications of creating AI that is capable of deception are still being explored and debated by researchers and experts in the field.

3. What are some examples of AI systems that have been developed to trick humans?

There are several examples of AI systems that have been developed to trick humans. One well-known example is the chatbot Eugene Goostman, which portrayed a 13-year-old boy and convinced some judges in a 2014 Turing Test competition that it was human. Another example is language models that have been shown to generate convincing fake news articles, which demonstrated the potential for AI to be used for malicious purposes. These examples highlight the potential for AI to be used to deceive and manipulate humans, and the importance of developing ethical guidelines and regulations to govern the use of AI.

4. How can humans detect when they are interacting with an AI system?

There are several clues that humans can look for to determine whether they are interacting with an AI system. One of the most obvious is the lack of human-like emotions and responses. AI systems are still limited in their ability to understand and respond to human emotions, so they may struggle to respond appropriately to certain situations. Additionally, AI systems may have a tendency to provide repetitive or predictable responses, which can be a giveaway that they are not human. However, as AI technology continues to advance, it may become increasingly difficult for humans to distinguish between AI and human interactions.
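The "repetitive or predictable responses" clue can be turned into a rough measurement. The sketch below is one possible heuristic, not an established detection method: it scores how much a set of replies overlap in vocabulary, using the mean pairwise Jaccard similarity of their word sets. The function names are hypothetical, and any threshold for "too repetitive" would need to be chosen by experiment.

```python
from itertools import combinations


def jaccard(a, b):
    """Jaccard similarity between the lowercase word sets of two strings."""
    set_a, set_b = set(a.lower().split()), set(b.lower().split())
    if not set_a and not set_b:
        return 1.0  # two empty replies are treated as identical
    return len(set_a & set_b) / len(set_a | set_b)


def repetition_score(replies):
    """Mean pairwise Jaccard similarity; 0.0 if fewer than two replies."""
    pairs = list(combinations(replies, 2))
    if not pairs:
        return 0.0
    return sum(jaccard(a, b) for a, b in pairs) / len(pairs)


if __name__ == "__main__":
    replies = [
        "I am sorry, I do not understand.",
        "I am sorry, I do not understand that.",
        "Could you tell me more about your day?",
    ]
    print(repetition_score(replies))
```

A high score only indicates that the replies reuse the same words. Humans can be repetitive too, and modern AI systems often are not, so a score like this is at best one weak signal among several.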